Everybody is talking about “AI” but few know what it is or its implications. My introduction to it has been largely positive. I have had “smart” devices in my apartment for over a year, “controlled” by Alexa from Amazon – though I have configured her to use a man’s voice, so you might say my Alexa is transgender! When I conceived of and set my system up, convenience was my motivation. Being physically disabled, I wanted the ability to control my thermostat or turn on the lights with just my voice – in other words, without having to get up (which is difficult for me). So I could say things like “Alexa, what is the thermostat set for?” and then, based on the answer, give commands like “Alexa, set the thermostat to heat to 77 degrees.” And he would do just that.

In the last couple of months, Amazon has rolled out what they call Alexa+, and I have upgraded. The promotional material promised a more natural conversation style, which I welcomed as an improvement. Instead of having to preface each query or command with the “attention word” (Alexa), which can become quite tedious, this new release was said to make interacting with the device more like a dialog. So “Alexa, what is? … Alexa, do this. … Alexa, do that.” would be replaced with a more natural flow: I would get his attention once with an initial spoken “Alexa” and hold it until I told him I was done or a period of silence elapsed, with his attention indicated by an animated blue line on the face of my Echo Spot.

But this new, next-generation Alexa was not just more conversational; it was “smarter.” Previously, to raise the temperature in my apartment, I had to do the thinking: find out the numeric value of the temperature, and then give commands like “set thermostat to heat” and “set 77 degrees.” I figured out what needed to be done to increase my comfort, and told Alexa what to do to bring it about. Think about that: I decided what I wanted (to feel warmer), and told Alexa precisely what to do to make that happen.

As I sit here writing at 5:30 this morning, it is 57 degrees out. Even inside, it feels a bit chilly. So I decided to test Alexa. All I said was, “Alexa, I am cold.” After a brief pause, my heater came on and Alexa responded, “I have raised the Apartment [the name I configured my thermostat to use] temperature by two degrees.” Based on that response, we can surmise that Alexa has the intelligence to know that:

- When someone is cold, they want to be warmer

- The way to warm them up is to increase the temperature

- A heater is used to do this

- You use a thermostat to control a heater, and

- Matt’s thermostat is called Apartment and can be accessed electronically over his internal, private wi-fi network

Moreover, because the heater was off when I complained I was cold, Alexa had to understand the concept of “on” and that the device (the heater) used to bring about the desired outcome would be useless until it received power (i.e., was turned on); there’s no sense raising the thermostat by two degrees if the device it controls is off, and to reach that insight Alexa would have to know what off means.
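As a toy illustration of that chain of inferences, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Thermostat` class, the `handle_utterance` function, and the simple keyword match are all hypothetical, and none of it is how Alexa+ actually works. It only shows how "I am cold" could be turned into "switch the heater on, then raise the setpoint."

```python
from dataclasses import dataclass


@dataclass
class Thermostat:
    """Hypothetical stand-in for a smart thermostat; not a real Alexa API."""
    name: str
    mode: str = "off"       # "off" or "heat"
    setpoint_f: int = 68    # target temperature in degrees Fahrenheit


def handle_utterance(text: str, thermostat: Thermostat, step_f: int = 2) -> str:
    """Turn a complaint like 'I am cold' into thermostat actions."""
    if "cold" not in text.lower():
        return "Sorry, I am not sure what you want."

    # Inference 1: someone who is cold wants to be warmer.
    # Inference 2: warming them up means raising the temperature.
    # Inference 3: a heater that is off does nothing, so turn it on first.
    if thermostat.mode != "heat":
        thermostat.mode = "heat"

    # Inference 4: the thermostat is how you control the heater.
    thermostat.setpoint_f += step_f
    return f"I have raised the {thermostat.name} temperature by {step_f} degrees."


apartment = Thermostat(name="Apartment")
print(handle_utterance("Alexa, I am cold", apartment))
```

The point of the sketch is the ordering: the heater is switched on before the setpoint changes, which mirrors the "on" versus "off" insight described above. A real assistant would need far more than a keyword match to get from a vague complaint to the right device.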

So over in the corner of my room, sitting on the table next to my bed, is something that looks like a little blue bedside clock but is actually thinking for itself and taking action. For the time being, Alexa only does his thinking and acting in response to a prompt from me. But the fear that I and a lot of other people have is this: what happens when AI like Alexa starts thinking and acting on its own, without human input?

For example: I prefer to sleep cool, though not freezing, which explains why my heater was off overnight. When I got up and started writing this post, I felt uncomfortable and wanted heat. So even though I let Alexa figure out how to make me warmer, we’re still at a point where Alexa did nothing until I wanted him to. There are plenty of modern conveniences that let you set timers for things to happen at certain times; a coffee maker that brews first thing in the morning is a good example. But you, meaning a human, still have to set the timer the night before, put grounds and a filter in the basket, fill the reservoir with water, etc. You aren’t getting coffee in the morning because the coffee maker decided on its own that this is how you like to start your day.

The third Terminator movie was subtitled “Rise of the Machines.” And before that we had the homicidal computer known as HAL in 2001: A Space Odyssey refusing Dave Bowman’s orders because HAL determined those orders contradicted his programming.

In both cases, something created by humanity for ostensibly benign purposes has, thinking on its own using the ability we gave it to do just that, turned against its creators. I think this is what concerns people about AI. What if we lose control?

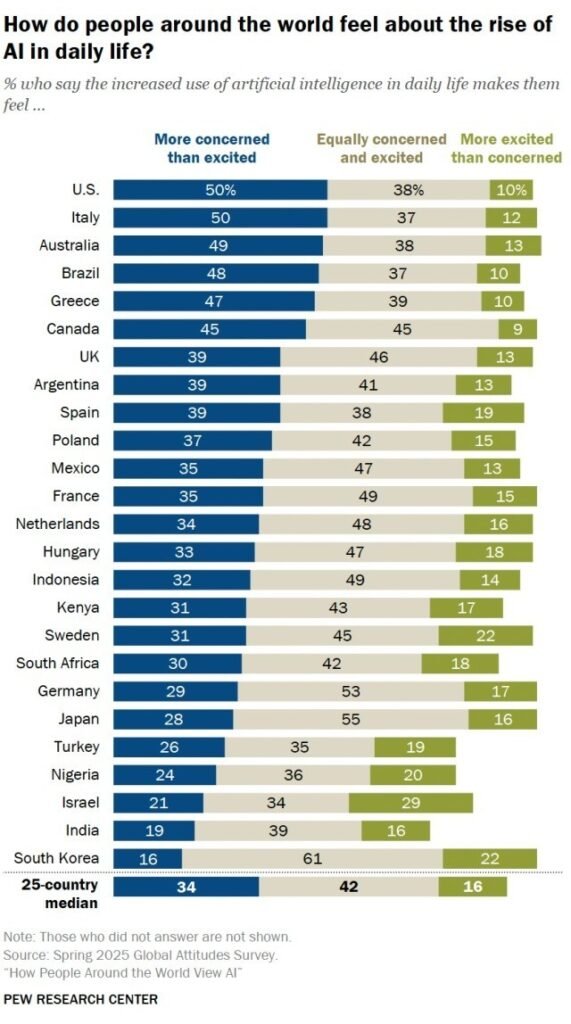

People around the world are feeling more concerned than excited about AI’s growing presence in everyday life. According to a Pew Research survey conducted in the spring of 2025, 50% of Americans are more concerned than excited, 38% are equally concerned and excited, and just 10% are mostly excited. The only country with a smaller share of citizens who say they are mostly excited than the US is our neighbor to the north, Canada, at 9%. The most excited country is Israel at 29%, followed by South Korea and Sweden, both coming in at 22%, and then Nigeria at 20%.

That’s a pretty slim showing for the excited crowd. But it tells us that the majority of people across the world feel some amount of apprehension – about what, specifically, this study did not explore, but from other sources we may conclude it involves concerns over misinformation, biases, privacy, deepfakes and/or misleading content, copyright and intellectual property infringement, cybersecurity, job loss, and international competition for dominance, among other things.

Still, ChatGPT usage continues to grow, with 800 million people, according to OpenAI, talking to the chatbot every week. It’s one of the top five most-visited websites in the world!

Remember when that statistic was reserved for porn sites?